Kibana geo_point How-To, Part 2

Step:

.Change Kibana & elk order. Elasticsearch (elk) now imports template_filebeat first, then waits for Logstash to send logs. Once that happens, Elasticsearch has indices such as filebeat-6.4.2-2018.11.19 and filebeat-6.4.2-2018.11.20.

Then Kibana imports the index-pattern and sets it as the default.

#!/bin/bash

echo '@edge http://dl-cdn.alpinelinux.org/alpine/edge/main' >> /etc/apk/repositories

echo '@edge http://dl-cdn.alpinelinux.org/alpine/edge/community' >> /etc/apk/repositories

echo '@edge http://dl-cdn.alpinelinux.org/alpine/edge/testing' >> /etc/apk/repositories

apk --no-cache upgrade

apk --no-cache add curl

echo "=====Elk config ========"

until echo | nc -z -v elasticsearch 9200; do

echo "Waiting for Elasticsearch to start..."

sleep 2

done

# Retry until Elasticsearch accepts the template (HTTP 200).

code=""

until [ "$code" = "200" ]; do

echo "=====Elk importing mappings json ======="

# -w '%{http_code}' prints only the HTTP status code; the response body is discarded.

code=$(curl -s -o /dev/null -w '%{http_code}' -XPUT 'elasticsearch:9200/_template/template_filebeat' -H 'Content-Type: application/json' -d @/usr/share/elkconfig/config/template_filebeat.json)

sleep 2

done

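# Wait until Logstash has created at least one filebeat-* index (needed for geo_point)

echo "=====Get Elasticsearch index list ======="

indexlists=()

while [ ${#indexlists[@]} -eq 0 ]

do

sleep 2

# The URL is quoted so the shell does not treat "?" as a glob character.

indexlists=($(curl -s 'elasticsearch:9200/_aliases?pretty=true' | awk -F\" '!/aliases/ && $2 != "" {print $2}' | grep filebeat-))

done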

sleep 10

#========kibana=========

id="f1836c20-e880-11e8-8d66-7d7b4c3a5906"

echo "=====Kibana default index-pattern ========"

until echo | nc -z -v kibana 5601; do

echo "Waiting for Kibana to start..."

sleep 2

done

# Retry until Kibana accepts the exported index-pattern (HTTP 200).

code=""

until [ "$code" = "200" ]; do

echo "=====kibana importing json ======="

code=$(curl -s -o /dev/null -w '%{http_code}' -XPOST 'kibana:5601/api/kibana/dashboards/import?force=true' -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d @/usr/share/elkconfig/config/index-pattern-export.json)

sleep 2

done

# Retry until Kibana stores the default index-pattern id (HTTP 200).

code=""

until [ "$code" = "200" ]; do

code=$(curl -s -o /dev/null -w '%{http_code}' -XPOST 'kibana:5601/api/kibana/settings/defaultIndex' -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d "{\"value\": \"$id\"}")

sleep 2

done

tail -f /dev/null
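The three retry loops in the script above follow the same pattern, so they could be folded into one helper. The following is only a sketch under assumptions: the function names retry_until_ok and http_ok and the variables RETRY_MAX and RETRY_DELAY are hypothetical and not part of the original script. Only standard curl options (-s, -o, -w, -X) are used.

```shell
#!/bin/bash

# retry_until_ok CMD [ARGS...]
# Run CMD repeatedly until it exits 0. Wait RETRY_DELAY seconds (default 2)
# between attempts and give up after RETRY_MAX attempts (default 30).
retry_until_ok() {
  local attempt=1
  while ! "$@"; do
    if [ "$attempt" -ge "${RETRY_MAX:-30}" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "${RETRY_DELAY:-2}"
  done
}

# http_ok METHOD URL [extra curl args...]
# Succeed only when the server answers with HTTP 200.
http_ok() {
  local method=$1 url=$2
  shift 2
  [ "$(curl -s -o /dev/null -w '%{http_code}' -X "$method" "$url" "$@")" = "200" ]
}

# Usage (same endpoint and file as in the script above):
# retry_until_ok http_ok PUT 'elasticsearch:9200/_template/template_filebeat' \
#   -H 'Content-Type: application/json' \
#   -d @/usr/share/elkconfig/config/template_filebeat.json
```

Unlike the original loops, this helper stops after a bounded number of attempts, so a permanently failing import does not keep the container retrying forever.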

.template_filebeat template_filebeat.json

* template_filebeat.json is built from the mappings of an existing index:

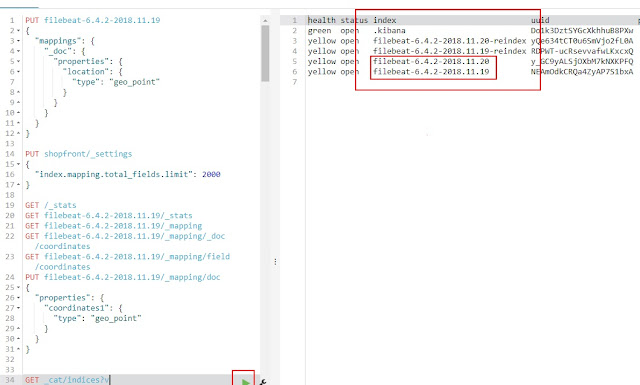

GET _cat/indices?v

You will see a list of indices (use GET _cat/indices?v&s=index to sort them by index name).

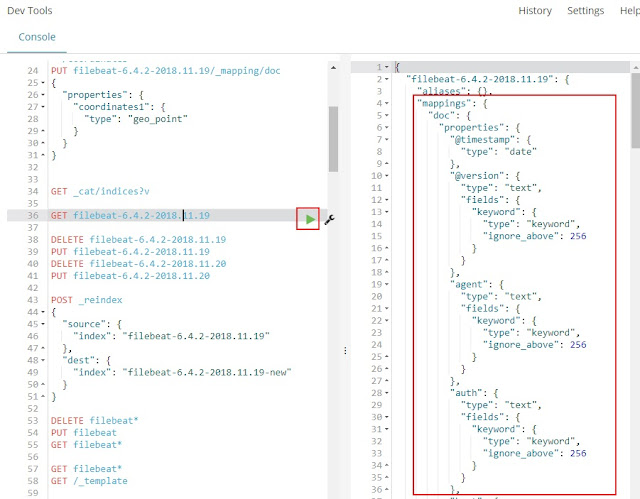

GET filebeat-6.4.2-2018.11.19
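The two console requests above can also be sent from a shell with curl. This is a small sketch, assuming Elasticsearch is reachable as elasticsearch:9200 as in the script. The variable ES_HOST and the function names list_indices and show_index are hypothetical.

```shell
# Host and port of Elasticsearch; override ES_HOST if yours differs.
ES_HOST="${ES_HOST:-elasticsearch:9200}"

# Equivalent of: GET _cat/indices?v&s=index
list_indices() {
  curl -s "$ES_HOST/_cat/indices?v&s=index"
}

# Equivalent of: GET <index>, pretty-printed so the mappings are easy to copy
show_index() {
  curl -s "$ES_HOST/$1?pretty"
}

# Usage:
# list_indices
# show_index filebeat-6.4.2-2018.11.19
```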

Now take the mappings from your own index and use them to replace the mappings in this template:

{

"index_patterns": ["filebeat*"],

"settings": {

"number_of_shards": 1

},

"mappings": {

"doc": {

"properties": {

"@timestamp": {

"type": "date"

},

...

}

Replace only the mappings section. The official documentation has an example:

https://www.elastic.co/guide/en/elasticsearch/reference/current/indices-templates.html

Then change the type of coordinates from float to geo_point:

"coordinates": {

"type": "float" => "geo_point"

},
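The same edit can be scripted instead of done by hand. This is a minimal sketch, assuming the mappings were saved pretty-printed, so that the "type" line comes directly after the "coordinates" line. The function name fix_coordinates_type and the .orig file name are hypothetical.

```shell
# Rewrite "float" to "geo_point", but only on the line that directly
# follows a line containing the "coordinates" key. Other float fields
# are left untouched.
fix_coordinates_type() {
  sed '/"coordinates"/{n;s/"float"/"geo_point"/;}'
}

# Usage (reads JSON on stdin, writes the edited JSON to stdout):
# fix_coordinates_type < template_filebeat.json.orig > template_filebeat.json
```

If the JSON is on a single line or formatted differently, this pattern will not match and the file comes out unchanged, so check the result before importing it.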

Save the file as template_filebeat.json.

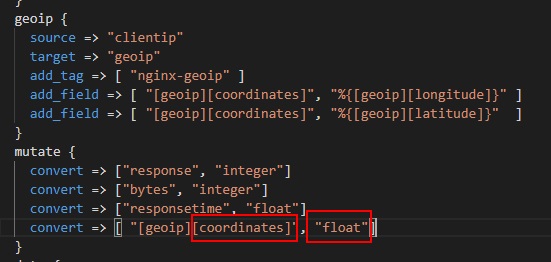

Recent Docker ELK Logstash setups usually already include geoip. Use add_field as in the picture, and use mutate to add some items. The type change itself is done here with the template.

So this step means you must first let Logstash send logs to Elasticsearch, then take the resulting fields and turn them into the template.

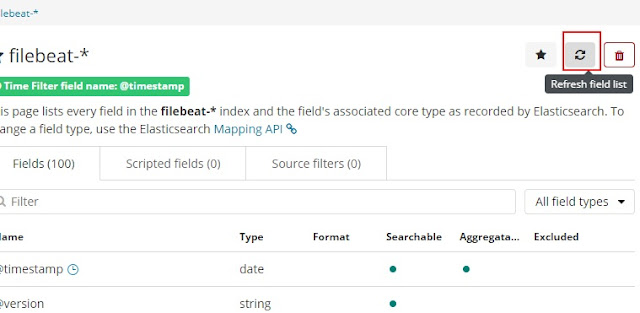

.index-pattern index-pattern-export.json

See this post for how to do it:

https://sueboy.blogspot.com/2018/11/kibana-default-index-pattern.html

Important: you must refresh before exporting. Only the JSON exported after the refresh is the correct file.

Now you can see the map.
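If the map still does not appear, it may help to check which type Elasticsearch actually recorded for coordinates. An index template is applied only when an index is created, so indices that existed before the template was imported keep their old float mapping. This is a sketch with a hypothetical function name coordinates_type, assuming pretty-printed mapping output with the "type" line directly after the "coordinates" line.

```shell
# Print the mapping type of every "coordinates" field found in
# pretty-printed mapping JSON read from stdin (duplicates removed).
coordinates_type() {
  sed -n '/"coordinates"/{n;s/.*"type"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p;}' | sort -u
}

# Usage:
# curl -s 'elasticsearch:9200/filebeat-*/_mapping?pretty' | coordinates_type
```

If the output contains float as well as geo_point, some older indices were created before the template was in place.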